In Short

- The Centre for Long-Term Resilience advocates for an AI incident reporting system in the UK.

- Over 10,000 AI safety incidents have been reported since 2014, highlighting the need for regulation.

- Recommendations include a government reporting system, engaging experts, and building DSIT capacity.

Summary of AI Incident Reporting Needs

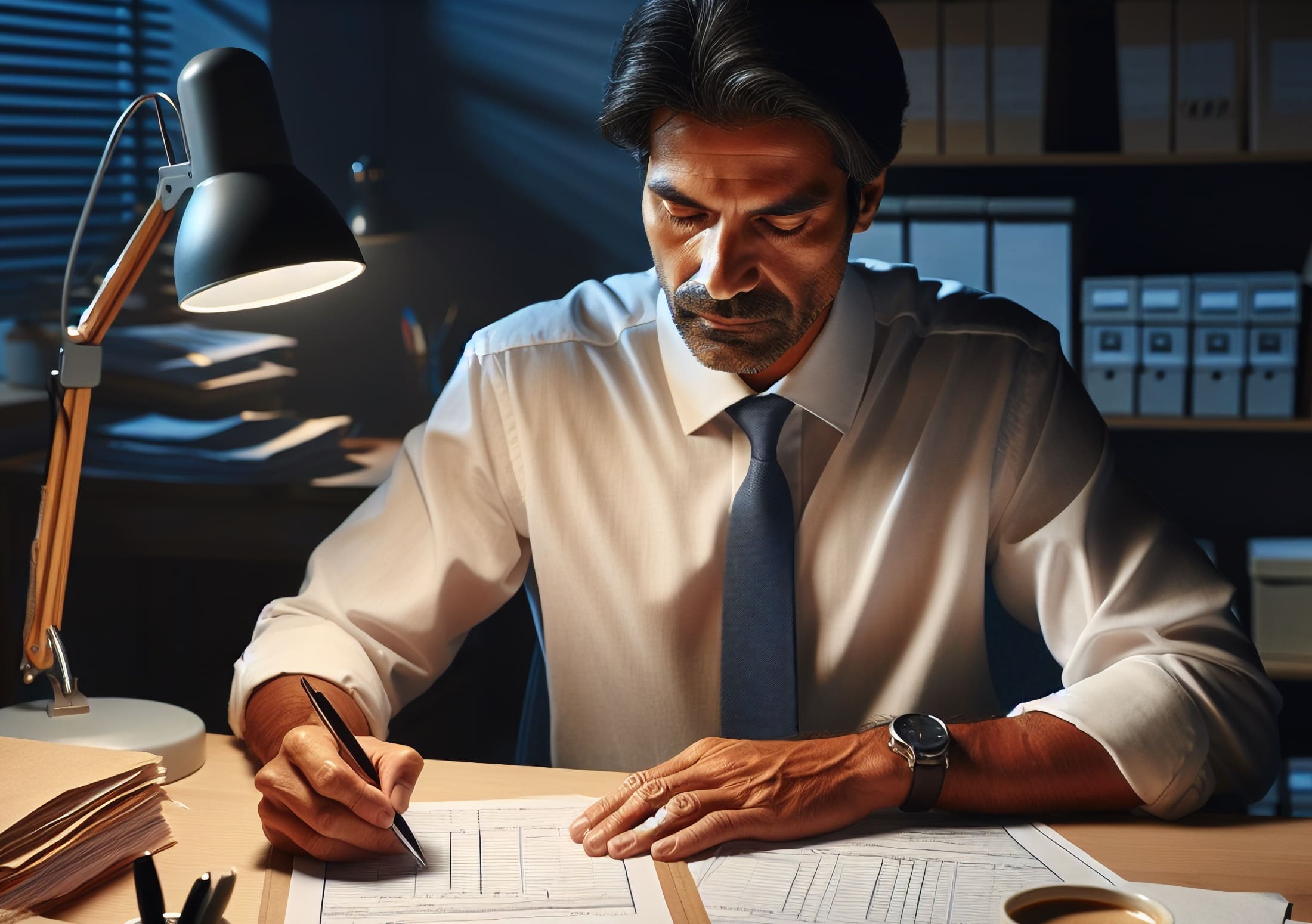

The Centre for Long-Term Resilience (CLTR) has called for a comprehensive AI incident reporting system to fill a significant gap in the UK’s AI regulatory framework. With AI increasingly integrated into society and more than 10,000 safety incidents reported since 2014, the think tank argues for a reporting regime akin to those used in aviation and medicine.

Such a system would enable monitoring of AI risks, coordination of responses to major incidents, and early detection of potential future harms. Current UK AI regulation does not give the Department for Science, Innovation & Technology (DSIT) sufficient visibility into critical incidents, which could include misuse of AI or harms caused by AI in public services.

To address this gap, the CLTR recommends that the UK Government act immediately on three fronts: establishing a public sector AI incident reporting system, consulting regulators and experts to identify and address gaps, and strengthening DSIT’s capacity to manage AI incidents.

Veera Siivonen, CCO and Partner at Saidot, welcomes the report’s timing and its call for a balance between regulation and innovation. Siivonen advocates for clear governance requirements and a variety of AI governance strategies to maximize AI’s economic and societal benefits while maintaining public trust.

Call to Action

For more detail, see the original article on the Centre for Long-Term Resilience’s call for an AI incident reporting system.